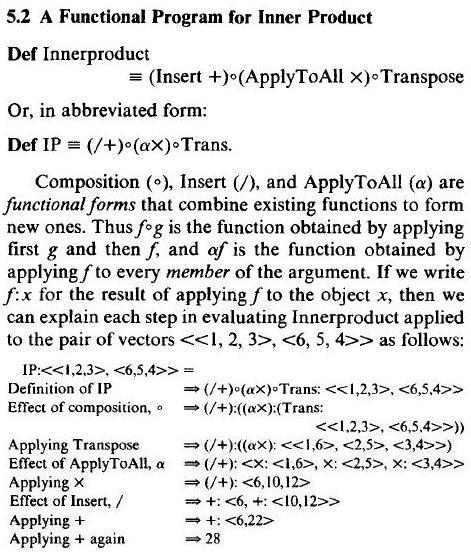

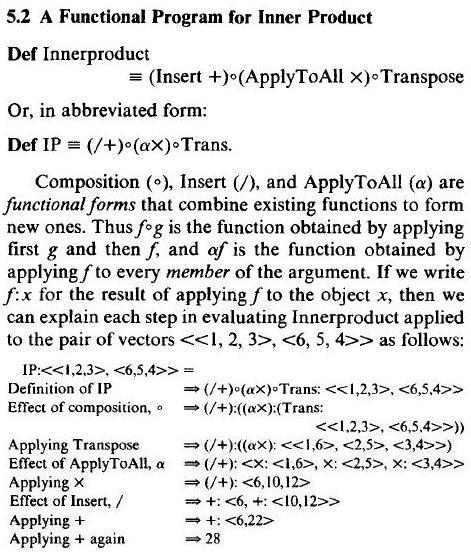

5.1 A von Neumann Program for Inner Product

int c = 0; for (int i=0; i < n; ++i) c = c + a[i] * b[i]

5.1 A von Neumann Program for Inner Productint c = 0; for (int i=0; i < n; ++i) c = c + a[i] * b[i]

|

partition( not1( bind2nd(

modulus(), 2)))" may be closer to functional programming than to traditional

C programming. I was reminded of John Backus' Turing Award lecture from 1978. He

divided between the applicative model (which includes functional programming) and

the Von Neumann model (that he characterized as word-at-a-time style of

programming). Here are excerpts below, and two contrasting implementations

to the right."Von Neumann programming languages use variables to imitate the computer's storage cells; control statements elaborate its jump and test instructions; and assignment statements imitate its fetching, storing, and arithmetic. The assignment statement is the von Neumann bottleneck ... Surely there must be a less primitive way of making big changes in the [computer] store than by pushing vast numbers of words [a large percentage of which are merely "names" to the data] back and forth through the von Neumann bottleneck. Not only is this tube a literal bottleneck for the data, but, more importantly, it is an intellectual bottleneck that has kept us tied to word-at-a-time thinking instead of encouraging us to think in terms of the larger conceptual units of the task at hand."

"Associated with the functional style of programming is an algebra of programs whose variables range over programs and whose operations are combining forms ... the use of combining forms for creating programs. Functional programs deal with structured data, are often nonrepetitive and nonrecursive, are hierarchically constructed, do not name their arguments, and do not require the complex machinery of procedure declarations to become generally applicable. Combining forms can use high level programs to build still higher level ones in a style not possible in conventional languages."

"For twenty years programming languages have been steadily progressing toward their present condition of obesity ... every feature of a von Neumann language must be spelled out in stupefying detail ... many complex features are needed to prop up the basically weak word-at-a-time style. The result is the inevitable rigid and enormous framework of a von Neumann language."

"The assignment statement splits programming into two worlds. The first world comprises the right sides of assignment statements. This is an orderly world of expressions, a world that has useful algebraic properties (except that those properties are often destroyed by side effects). It is the world in which most useful computation takes place. The second world of conventional programming languages is the world of statements. The primary statement in that world is the assignment statement ... This world of statements is a disorderly one, with few useful mathematical properties."

"Functional forms naturally belong to the world of expressions."

"APL versus word-at-a-time programming ... Kenneth Iverson (1964) ... lambda expressions ... new functional forms ... the effort to write one-line programs is partly motivated by the desire to stay in the more orderly world of expressions."

home implementation |

Reuse versus ReadabilityBjarne Stroustrup spoke at GoingNative 2012. The presentation is here.One of his major points was: prefer algorithms to unstructured code. His summary was: focus on algorithms (not "code") – consider generality and reuse. The example he used started at slide 50 where he recounted a developer that designed a routine to drag an item to an insertion point. The code was: 25 lines, one loop, three tests, 14 function calls. The developer and Stroustrup were concerned ... |

Is it correct? Who knows - try lots of testing Is it maintainable? Probably not, since it is hard to understand Is it usable elsewhere? No, it's completely hand-crafted to the details of the problemStroustrup went on to push for reuse of STL instead of writing custom code. Here are his first and second refactoring.

// slide 51 ... comprehensible, maintainable, special purpose

void drag_item_to( Vector& v, Vector::iterator source, Coordinate p ) {

// Find the insertion point

Vector::iterator dest = find_if( v.begin(), v.end(), contains(p) );

if (source < dest)

rotate( source, source+1, dest ); // from before insertion point

else

rotate( dest, source, source+1 ); // from after insertion point

}

// slide 52 ... why move only one item? ... shorter, simpler, faster, general

// Move elements for which pred() is true to the insertion point p

template

pair gather( Iter first, Iter last, Iter p, Predicate pred ) {

return make_pair(

stable_partition( first, p, ! bind(pred, _1) ), // from before insertion point

stable_partition( p, last, bind(pred, _1) ) // from after insertion point

);

}

It is not clear to me that these refactorings are obviously better than:

25 lines, one loop, three tests, 14 function calls. To understand the new

drag_item_to and gather functions, you have to

know about and have a high degree of comfort with:

STL ranges ----- [begin, end) std::find_if --- find the first element in the range [first, last) that satisfies the predicate std::rotate ---- rotates the order of elements in a range std::make_pair - create a pair without writing the types explicitly std::stable_partition - move all elements in range [beg,end) to the front that satisfy the predBut I content that templates of functions of templates of functions of ... produces code that appears (to the general population of programmers, engineers, and domain experts) to be write-only. Reading that code later is too hard. The "cost of ownership" to the organization of that kind of code is hard to justify.

function( function<template>( [&lambda](int x) {lambda += x; }, macro( functionAdapter(

functionObject() ), predicate ) ), parameter, *(functionPointer)() )

This kind of "mongo-length, one-statement-is-the-program" code is a way of life

in functional programming, AWK, and APL. But is it necessary and sufficient

in a heterogeneous workplace?

As an exercise of discovery, I started with Josuttis' example of stable_partition,

and rewrote it with code that is "completely hand-crafted to the details of the problem" (as

Stroustrup's slide 50 would characterize it). I think my "not invented here", "write it

from scratch" implementation is an okay choice.

Perhaps this boils down to a "functional programming" versus "imperative programming" (or von Neumann style) argument.

// Nicolai Josuttis, "The C++ Standard Library: a tutorial and reference", 1999 // p396 -- Move all even elements to the front vectorSome bullets that Stroustrup or coworkers have discussed:v(9); for (int i=0; i < 9; ++i) v[i] = i + 1; // p305 ... modulus ... function obj that takes 2 params (p1,p2) // p306 ... bind2nd ... transform binary function obj into unary function obj (bind 2 to p2) // p306 ... not1 ...... unary negation // bind2nd and not1 are "function adapters" // p395 ... stable_partition ... moves all elements in range [beg,end) to the front // for which the unary predicate returns true // (preserving their original relative order) // The book's implementation // vector ::iterator pos = std::stable_partition( v.begin(), v.end(), // std::not1( std::bind2nd( std::modulus (), 2 ) ) ); // My contrarian implementation vector ::iterator it = v.begin(), pos(it); for (int temp; it != v.end(); ++it) if (*it % 2 == 0) { temp = *it; // remember the value found v.erase( it ); // remove the even element from its current position pos = v.insert( pos, temp ); // insert the element in order in the front ++pos; // [insert() returns the pos of the new element] } cout << "first odd element: " << *pos << '\n'; for (pos = v.begin(); pos != v.end(); ++pos) cout << *pos << ' '; cout << '\n'; // first odd element: 1 // 2 4 6 8 1 3 5 7 9

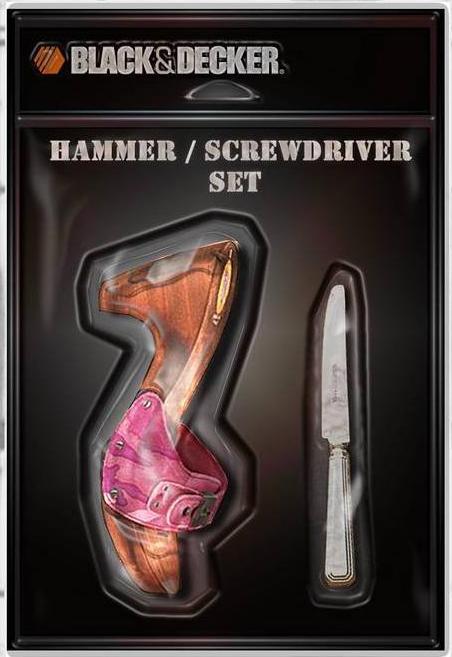

But what if you have an entire toolbox of hammers? Does that make the

"one tool fits all" attitude okay?

Silliness aside –

In the original "Jaws" movie, the Roy Scheider character comes face to face

with the shark and tells the Robert Shaw character, "You're gonna need a

bigger boat." The context here was a problem/requirements that were

initially underestimated.

The phrase "the proverbial bigger hammer" gets used a lot at my work and home.

The context is routinely: after trying every seemingly reasonable option,

it's now time for trial and error. I am reminded of the Sherlock Holmes

quote, "When you have eliminated the impossible,

whatever remains, however improbable, must be the truth?" When you

have tried everything, whatever remains, however improbable, must

be a possible answer.

Years ago I read about the "omega curve". It's a Dilbert-like

characterization of the way things work. The "bigger hammer"

oftentimes refers to the resignation of taking the long way around

just to get from here to there.

Maybe there is a mapping from "hammers" to "problem solving", and from

"nails" to "solutions" (aka algorithms).

Maybe "bubble sort" is a small nail (good emough), and "merge sort"

is a big nail (too heavyweight).

Or – maybe an elegant, small time/space algorithm is a small

nail, and a 10-page, uninspired, 9-to-5, serviceable algorithm is a

big nail.

Here are two stories that illustrate a contrast between the workman and

the craftsman – i.e. an uninspired, nose-to-the-grindstone plugger

versus a reflective, eccentric innovator.

... by: Gerald Weinberg, The Psychology of Computer Programming,

Van Nostrand Reinhold, 1971, p165 ...

Problem-avoiding behavior is intelligent behavior at its highest (although not

very intelligent if one is trying to attract the eye of a poorly trained

manager). It will always be difficult to appreciate how much trouble we are

not having, just as it will always be difficult to appreciate a really good job

of problem solving. Once the problem solution has been shown, it is easy to

forget the puzzlement that existed before it was solved.

Lacking any objective measure, we often judge how difficult a program is by how

hard a programmer works on it. Using this sort of measure, we can easily fall

into believing that the worst programmers are the best - because they work so

hard at it.

A case in point was a programmer who worked 14 hours a day, seven days a week,

for eight weeks to get a small program running in a new installation. For his

efforts, his company gave him an award for exceptional service. Shortly

thereafter, another programmer (for the first had been promoted to a management

position as an additional reward) was given the job of making some additions to

this program. He found that the program was such a confusing mess that it was

easier to rewrite it than to try and modify it.

The rewriting and debugging took exactly one week, working normal hours. Even

considering that writing a program for the second time is far easier that

writing it the first, the difference is significant. Moreover, the new program

ran eight times faster than the old, took half the storage, and was half as

many lines of coding. Clearly, the first programmer had been rewarded for

making a mountain out of a molehill.

... by: Professor Neil W. Rickert,

"The Parable of The Two Programmers",

ACM SIGSOFT Software Engineering Notes, Volume 10, Number 1, January 1985 ...

Acme and Consolidated need the identical application.

Acme hires analyst Alan. He decides to use the PQR structured design

methodology, and asks to form a team with three additional programmers. They

set to work churning out preliminary reports and problem analyses. After two

months, they release an implementation timetable. In another two months they

will have a test version of the application, followed by a two month period of

test and evaluation.

The team specifies three iterations of analysis, design, implementation, and

integration. Throughout the iterative development, much time is spent

brain-storming and negotiating the interfaces between the various modules.

Alan delivers the preliminary version one week ahead of schedule. He supplies

a list of the deficiencies that he expects to correct. During test, the users

find a number of additional bugs and deficiencies. Alan explains that this is

no surprise. After all, its a preliminary version.

At the six month milestone, the team completes the application. It is 2500

lines of code. One or two features are missing, and it is very fussy about the

format of its input. Acme decides: to put it into production, train the

data-entry staff in the strict format requirements, and have maintenance

programmers complete the missing features.

Alan is complimented for completing his project on schedule. His supervisor

looks over the deliverables. It is clear the company's coding and

documentation standards had been meticulously observed. He gives up attempting

to read the code - it seems quite incomprehensible. The application is

obviously much more complex than originally imagined. Alan is given a hefty

raise, and promoted to Senior Systems Engineer.

Consolidated hires programmer Charles. He spends some time thinking about the

problem (as opposed to the application?). His supervisor grows concerned that

he seems to spend a lot of time with his feet on the desk, drinking coffee.

Later, he divides his time between scribbling on little scraps of paper, and

drinking coffee with his feet on the desk.

After two months, his supervisor is growing tired of Charles' goofing off. He

begins to keep a close watch on Charles, and is pleased to notice that he now

seems to be busy most of the time. He has even been seen to delay his lunch,

and stay after work two or three days a week.

After three months, Charles announces the application is complete. It is 500

lines of code. The application appears to be clearly written, and when tested,

it does everything in the specifications. In fact, it even has a few

additional convenience features which might significantly improve the usability

of the program.

At first the supervisor is impressed. But as he reads through the code, he

realizes that the application is much simpler than he had originally thought.

At Charles' next salary review, the supervisor remembers the two months of

goofing off and awards a below average raise. A few months later, Charles

leaves Consolidated.

Footnote. Anyone who teaches programming courses will attest that: a five to

one ratio between the longest and shortest programs is quite typical, and the

shorter programs are usually better.

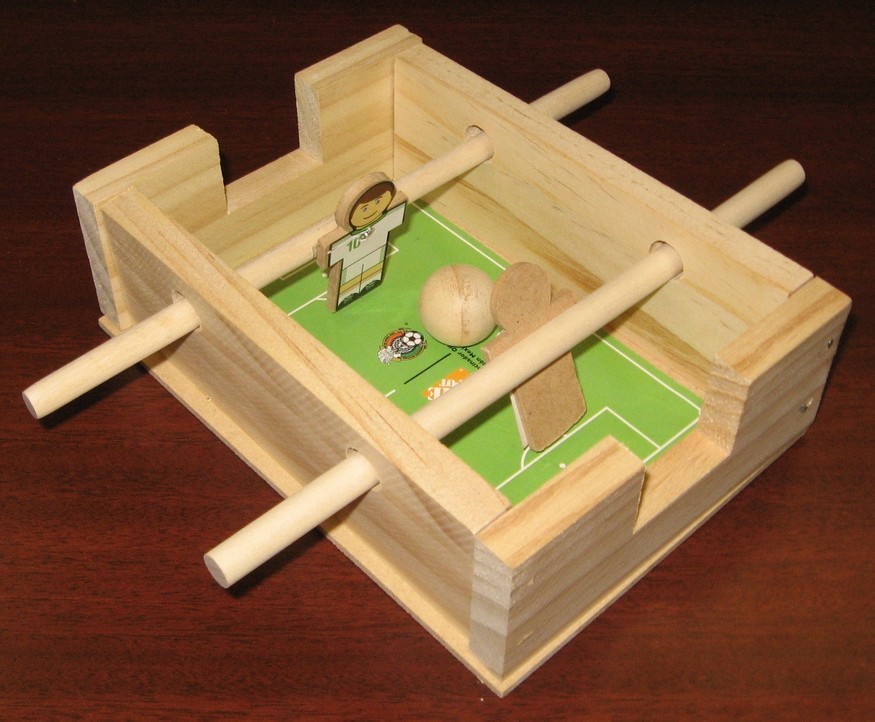

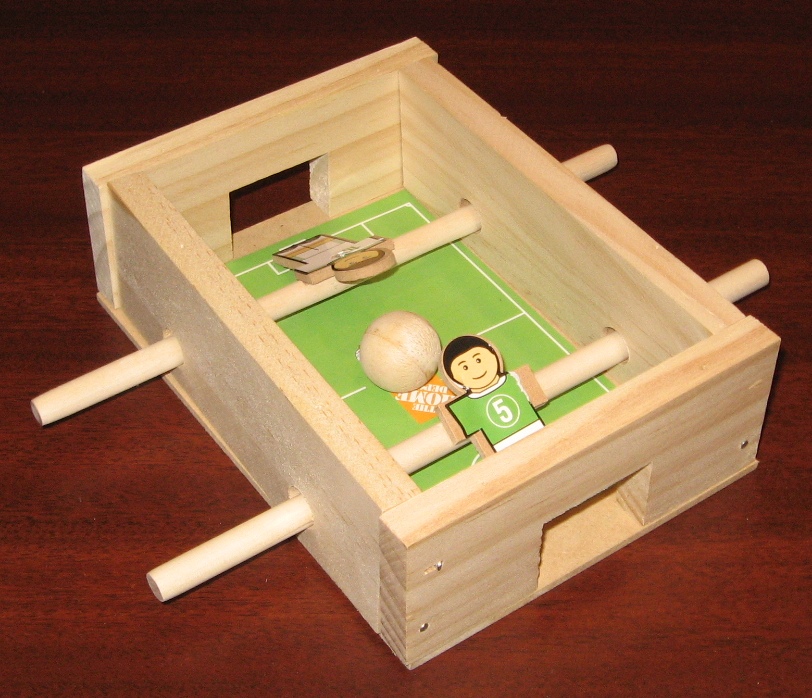

Even the multilingual accomodation was incapable of forestalling

these mistakes.

In the second project, the ignorance was truly invincible: the team that

built it complained to volunteers that they couldn't get the players to

stand up straight.

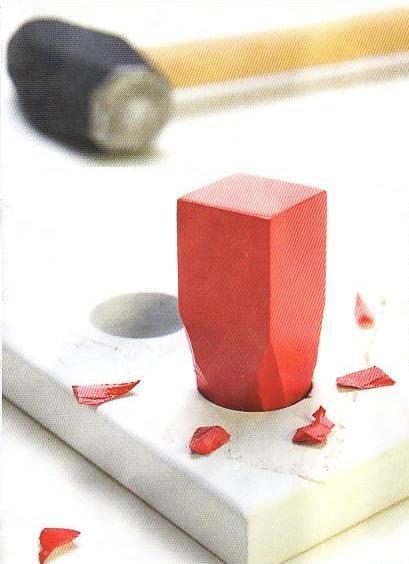

The bigger hammer

"When all you have is a hammer – every problem looks like a nail."

The bigger nail

The "bigger hammer" is a fairly ubiquitous expression, but what about

the notion of the "bigger nail"? Is it a new or different concept?

Does it offer any leverage in the domain of software?

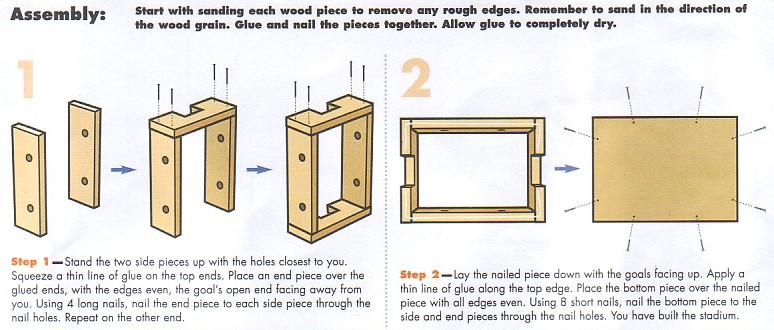

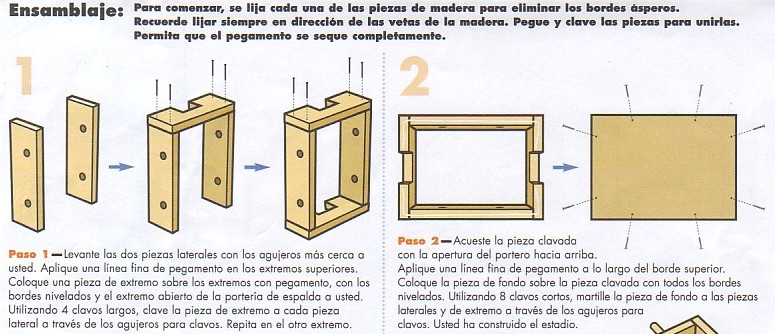

Attention to detail

In Catholic theology there is the idea of "invincible ignorance". The

all-too-human reluctance to "read the instructions" is arguably an

example of invincible ignorance. Here are 3 demonstrations of failure

to read (or even glance at) the instructions. Each of these projects was built

by a father/son or volunteer/child team during a Home Depot craft Saturday.

|

Improvise, adapt, overcomeI was closing my gate and noticed a nail had worked itself loose. I didn't feel like fetching a hammer, and I spotted a brick close by – problem solved.This product demonstrates the same kind of resourcefulness. "If you can't be with the hammer you love, then hammer the shoe you're with." |

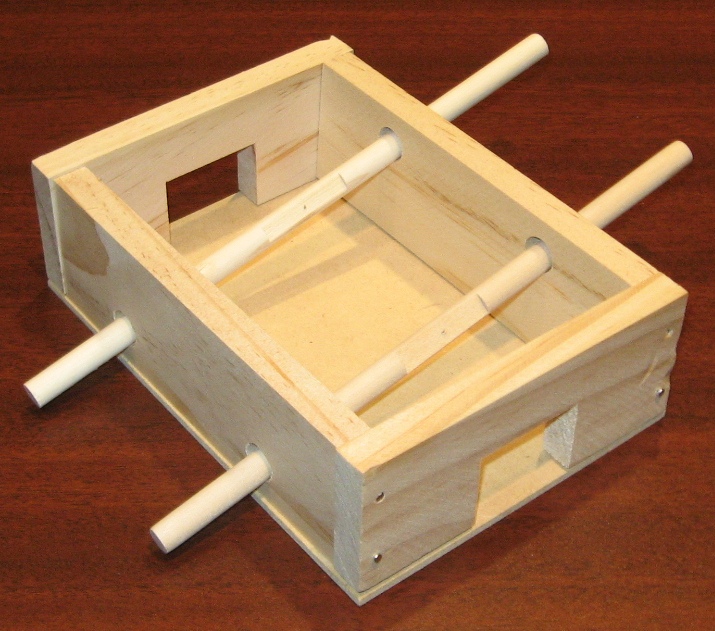

Interfaces matterSo – the interface specification calls for a round hole ... but you create a square peg instead. Not to worry ... I can make that square peg "fit" the round hole. Interfaces are how we accomodate parallel development. Consumers do not have to wait on producers if an interface has been defined that the producer implements, and the consumer uses. Once defined, both parties can proceed to their own pace, decoupled from the other, until the integration phase occurs. That makes perfect sense – what could possibly go wrong? Some of the answers are:

|

|